Introduction

The field of gesture recognition seeks to bridge the gap between human intent and digital action, teaching machines to understand the silent, fluent language of our hands or face. The ultimate goal of gesture recognition is to make technology an invisible and responsive extension of our will.

This article traces the technical history of gesture recognition, a journey from cumbersome, wired-up contraptions to the sophisticated, AI-powered systems of today, examining the key technological shifts that have defined this fascinating field.

What is Gesture Recognition?

Gesture recognition is a technology that detects human movements and interprets them as digital commands. It uses a combination of cameras, sensors, and artificial intelligence to translate natural motions into machine-readable signals. If a system requires the user to wear special devices or markers for tracking, such as gloves fitted with sensors, it is called marker-based recognition. By contrast, if it uses only cameras (2D or 3D) to track bare-hand gestures without any physical aid, it is called markerless gesture recognition.

The Early Era of Gesture Recognition

The first serious attempts at gestural input emerged from the nascent field of virtual reality in the 1980s. In this era, the approach to gesture recognition was not to see a gesture, but to measure it directly. This required users to wear their interfaces, physically tethering them to a machine.

The most iconic device of this period was the VPL DataGlove. It was a pioneering piece of hardware that translated hand and finger movements into digital data, representing a significant first step in the history of gesture recognition.

The VPL DataGlove, circa 1988, was a pioneering device that used fiber optics and a magnetic tracker for early sensor-based gesture recognition.

Finger Flexion

Along the knuckles of each finger ran specially treated fiber-optic cables. When a user bent their finger, the cable would curve, causing a quantifiable amount of light to leak. A photosensor measured this light loss, providing a direct analog reading of the finger's flexion.
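To make the light-loss idea concrete, here is a minimal Python sketch that turns a raw photosensor reading into a bend angle. It assumes, purely for illustration, a two-point calibration (flat hand and closed fist) and a linear relationship between light loss and angle. The real DataGlove's response curve and calibration procedure may differ, and `make_flex_calibration` is a hypothetical helper.

```python
def make_flex_calibration(flat_reading, fist_reading, fist_angle_deg=90.0):
    """Build a reading-to-angle function from two calibration poses.

    Hypothetical model: light reaching the photosensor falls linearly as the
    finger bends, from `flat_reading` (straight) to `fist_reading` (fully bent).
    """
    if flat_reading == fist_reading:
        raise ValueError("calibration readings must differ")
    span = flat_reading - fist_reading

    def to_angle(reading):
        # Fraction of the calibrated light loss seen in this reading.
        fraction = (flat_reading - reading) / span
        # Clamp so readings outside the calibrated range stay in [0, fist_angle].
        fraction = min(max(fraction, 0.0), 1.0)
        return fraction * fist_angle_deg

    return to_angle


# Example: raw sensor counts of 1000 (flat) and 600 (fist).
to_angle = make_flex_calibration(1000, 600)
print(to_angle(800))  # 45.0
```

Per-user calibration matters because two people's hands, or two wearings of the same glove, leak different amounts of light at the same joint angle.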

Position and Orientation

To locate the hand in 3D space, an electromagnetic tracking sensor (like those from Polhemus) was mounted on the back of the glove. A stationary source nearby generated a low-frequency magnetic field. By measuring that field at the glove, the system could calculate the hand's precise position (X, Y, Z) and orientation (yaw, pitch, roll).
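A 6-DOF reading like this is commonly packed into a 4x4 homogeneous transform, so that points defined relative to the glove can be placed in the room. The sketch below assumes angles in radians and a Z-Y-X rotation order (yaw, then pitch, then roll). Trackers of the era did not necessarily report angles in this convention, and `pose_to_matrix` and `transform_point` are hypothetical names.

```python
import math


def pose_to_matrix(x, y, z, yaw, pitch, roll):
    """4x4 homogeneous transform for a 6-DOF pose (angles in radians).

    Assumed convention: R = Rz(yaw) @ Ry(pitch) @ Rx(roll).
    """
    cy, sy = math.cos(yaw), math.sin(yaw)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cr, sr = math.cos(roll), math.sin(roll)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr, x],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr, y],
        [-sp, cp * sr, cp * cr, z],
        [0.0, 0.0, 0.0, 1.0],
    ]


def transform_point(matrix, point):
    """Map a point from the glove's frame into the tracker's (room) frame."""
    px, py, pz = point
    return [row[0] * px + row[1] * py + row[2] * pz + row[3] for row in matrix[:3]]


# Hand 0.5 m along X and 1 m up, rotated 90 degrees in yaw.
T = pose_to_matrix(0.5, 0.0, 1.0, math.radians(90), 0.0, 0.0)
fingertip = transform_point(T, (0.1, 0.0, 0.0))  # hypothetical point 10 cm ahead of the sensor
print([round(c, 3) for c in fingertip])  # [0.5, 0.1, 1.0]
```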

Together, these sensors provided a live stream of finger-flexion readings plus the tracker's 6-Degrees-of-Freedom (6-DOF) hand pose, allowing users to directly manipulate simple 3D objects. It was a brute-force solution, but it proved that real-time gesture recognition was viable.
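One simple way to turn such a stream into recognized hand postures is to compare the current flex readings against stored templates. The nearest-template classifier below is a minimal sketch of that idea. It is not VPL's actual method, and the posture names, angle values, and distance cutoff are illustrative assumptions.

```python
import math

# Hypothetical posture templates: bend angles in degrees for
# (thumb, index, middle, ring, little). Values are illustrative only.
POSTURES = {
    "open_hand": (0, 0, 0, 0, 0),
    "fist": (60, 90, 90, 90, 90),
    "point": (60, 0, 90, 90, 90),
}


def classify_posture(flex, templates=POSTURES, max_distance=60.0):
    """Return the name of the nearest template, or None if nothing is close enough."""
    best_name, best_dist = None, math.inf
    for name, template in templates.items():
        dist = math.dist(flex, template)  # Euclidean distance (Python 3.8+)
        if dist < best_dist:
            best_name, best_dist = name, dist
    return best_name if best_dist <= max_distance else None


print(classify_posture((55, 5, 85, 88, 92)))  # point
print(classify_posture((30, 45, 45, 45, 45)))  # None: far from every template
```

The `max_distance` cutoff lets the system report "no gesture" instead of forcing every in-between hand shape into the closest category.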

The Vision-Based Revolution in Gesture Recognition

The ultimate goal was to liberate users from these physical tethers. The community envisioned a future where an inexpensive camera could perform gesture recognition, a concept artistically explored by pioneers like Myron Krueger with his "Videoplace" installations. However, moving from tracking a simple 2D silhouette to understanding a nuanced 3D hand in a cluttered, real-world scene was a monumental computer vision challenge.

The late 1990s and early 2000s became a hotbed of innovation for markerless gesture recognition. This era was defined by a move towards statistical modeling and machine learning to interpret pixels, with several key approaches emerging concurrently.


A Snapshot of the Era (c. 2001)

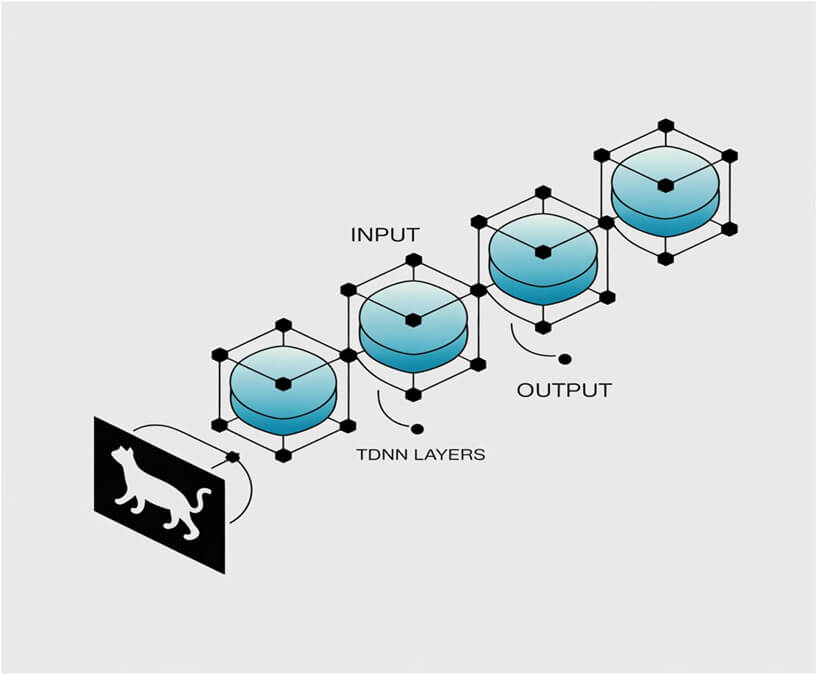

One significant contribution came from Ming-Hsuan Yang and Narendra Ahuja in their 2001 book, Face Detection and Gesture Recognition for Human-Computer Interaction. Their work offers a representative technical snapshot of the sophisticated, multi-stage pipelines of the time. Their algorithm first isolated motion, then used a probabilistic model of human skin color to identify hands. Finally, to classify the motion, they employed a Time-Delay Neural Network (TDNN), an architecture well suited to recognizing the temporal patterns inherent in gestures.
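A probabilistic skin-color model can be as simple as a Gaussian over brightness-normalized color. The sketch below uses a single 2D Gaussian in normalized (r, g) chromaticity space. This is a common textbook choice and not necessarily the model Yang and Ahuja used. The mean and covariance values are made-up placeholders that would normally be estimated from labeled skin pixels.

```python
import math

# Hypothetical skin model in normalized (r, g) chromaticity space.
# Placeholder numbers: in practice the mean and covariance are
# estimated from hand-labeled skin pixels.
SKIN_MEAN = (0.45, 0.31)
SKIN_COV = ((0.0020, -0.0005),
            (-0.0005, 0.0010))


def chromaticity(rgb):
    """Normalize an (R, G, B) pixel to (r, g) so brightness changes matter less."""
    total = sum(rgb)
    if total == 0:
        return (0.0, 0.0)
    return (rgb[0] / total, rgb[1] / total)


def skin_likelihood(rgb, mean=SKIN_MEAN, cov=SKIN_COV):
    """2D Gaussian density of the pixel's chromaticity under the skin model."""
    (a, b), (c, d) = cov
    det = a * d - b * c
    inv = ((d / det, -b / det), (-c / det, a / det))
    r, g = chromaticity(rgb)
    dx, dy = r - mean[0], g - mean[1]
    # Squared Mahalanobis distance from the mean skin color.
    m2 = dx * (inv[0][0] * dx + inv[0][1] * dy) + dy * (inv[1][0] * dx + inv[1][1] * dy)
    return math.exp(-0.5 * m2) / (2 * math.pi * math.sqrt(det))


def skin_mask(image, threshold):
    """Label each pixel of a row-major image as skin (True) or not (False)."""
    return [[skin_likelihood(px) >= threshold for px in row] for row in image]


# One skin-toned pixel and one sky-blue pixel.
print(skin_mask([[(200, 140, 105), (80, 120, 220)]], threshold=1.0))  # [[True, False]]
```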

In a TDNN, the input layer receives a sequence of hand or body movement data, the hidden layers learn the temporal patterns in that sequence, and the output layer classifies the performed gesture.
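The defining trait of a TDNN is that the same weights are applied to a sliding window of consecutive frames, which makes it equivalent to a 1D convolution over time. Below is a single time-delay layer in plain Python, plus a toy classifier that averages the layer's outputs over time. The per-frame features (hand velocity `(dx, dy)`), the hand-set weights, and the gesture labels are all hypothetical. A real TDNN would stack several such layers and learn its weights from data.

```python
import math


def tdnn_layer(frames, kernels, biases, activation=math.tanh):
    """One time-delay layer.

    frames:  T feature vectors, each of length F.
    kernels: one kernel per output unit; each kernel holds k delay taps,
             and each tap is a weight vector of length F.
    Output at step t depends on frames t .. t+k-1, with the same weights
    reused at every t (a 1D convolution over time).
    """
    k = len(kernels[0])
    outputs = []
    for t in range(len(frames) - k + 1):
        window = frames[t:t + k]
        row = []
        for kernel, bias in zip(kernels, biases):
            total = bias + sum(
                w * x
                for tap, frame in zip(kernel, window)
                for w, x in zip(tap, frame)
            )
            row.append(activation(total))
        outputs.append(row)
    return outputs


def classify(frames, kernels, biases, labels):
    """Average each unit's activation over time and pick the strongest."""
    outputs = tdnn_layer(frames, kernels, biases)
    scores = [sum(row[i] for row in outputs) / len(outputs) for i in range(len(labels))]
    return labels[scores.index(max(scores))]


# Hypothetical hand-set kernels over 3 frames of (dx, dy) hand velocity.
kernels = [
    [(1.0, 0.0)] * 3,   # responds to sustained rightward motion
    [(-1.0, 0.0)] * 3,  # responds to sustained leftward motion
]
biases = [0.0, 0.0]
labels = ["swipe_right", "swipe_left"]

print(classify([(1.0, 0.1)] * 5, kernels, biases, labels))  # swipe_right
```

Because the weights are shared across time, the layer recognizes the same motion pattern whether it occurs early or late in the sequence.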

Yang and Ahuja's pipeline was only one of several crucial advances in vision-based gesture recognition. During the same period, the Viola-Jones object detection framework was introduced. Although best known for enabling real-time face detection, its combination of Haar-like features, a fast "integral image" representation, and a cascade of classifiers set a standard for computational efficiency that influenced the entire field, including gesture recognition. These parallel developments show the era was attacking the problem from multiple angles: some focused on detailed temporal classification, others on sheer detection speed.
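Much of that efficiency came from two ideas. The integral image lets the sum of any rectangle be read with four lookups, regardless of the rectangle's size. The cascade discards most candidate windows after only a few cheap tests. The sketch below shows both ideas in plain Python. The single two-rectangle feature and the hand-written stage thresholds are illustrative stand-ins for the thousands of features and AdaBoost-trained stages a real detector uses.

```python
def integral_image(img):
    """ii[y][x] holds the sum of img over all rows < y and columns < x."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii, x, y, w, h):
    """Sum of the w-by-h rectangle whose top-left pixel is (x, y): four lookups."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


def two_rect_feature(ii, x, y, w, h):
    """Haar-like feature: left half minus right half (responds to vertical edges)."""
    half = w // 2
    return rect_sum(ii, x, y, half, h) - rect_sum(ii, x + half, y, half, h)


def cascade_accepts(ii, window, stages):
    """Run stages in order and reject as soon as any stage fails."""
    for stage in stages:
        if not stage(ii, window):
            return False  # early exit: later, costlier stages never run
    return True


# Toy 4x4 image: bright left half, dark right half.
img = [[10, 10, 0, 0] for _ in range(4)]
ii = integral_image(img)
print(rect_sum(ii, 0, 0, 4, 4))          # 80
print(two_rect_feature(ii, 0, 0, 4, 4))  # 80

# Hypothetical two-stage cascade with hand-picked thresholds.
stages = [
    lambda ii, win: two_rect_feature(ii, *win) > 20,  # cheap edge test first
    lambda ii, win: rect_sum(ii, *win) < 200,         # then a brightness check
]
print(cascade_accepts(ii, (0, 0, 4, 4), stages))  # True
```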


Start of the Modern Era in Gesture Recognition (2012-Present)

The current era of gesture recognition is defined by one force: deep learning. The cultural and technical shift began around 2012, when a model named AlexNet shattered records in the ImageNet image classification challenge. This "ImageNet moment" proved that deep neural networks, trained on massive datasets, could outperform hand-crafted systems. This revolution quickly rippled out from static images to the more complex domain of video and gesture recognition. The painstakingly designed features of the past were replaced by end-to-end models that learned directly from raw pixel data.


The Transformer Era (2020 - Present)

Most recently, the Transformer architecture, which revolutionized natural language processing, has been adapted to images as the Vision Transformer (ViT) and extended to video. Unlike RNNs, which process frames sequentially, Transformers use a mechanism called "self-attention." This allows the model to look at all frames of a gesture at once and weigh the importance of every frame relative to every other frame. This is especially powerful for gesture recognition because it can capture complex, long-range dependencies. For instance, the model can learn that the hand shape at the beginning of a sign language gesture is critical for interpreting its conclusion seconds later.
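Scaled dot-product self-attention fits in a few lines. In this minimal single-head sketch, each frame is a feature vector. As a simplifying assumption, the frame vectors serve directly as queries, keys, and values; real Transformers learn separate projection matrices and use multiple heads. Note that the first and last frames attend to each other directly, however many frames lie between them.

```python
import math


def softmax(scores):
    """Numerically stable softmax."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(frames):
    """Single-head scaled dot-product self-attention over T frame vectors.

    Simplification: queries, keys and values are the frame vectors themselves.
    Returns (outputs, weights), where weights[i][j] is how much frame i
    attends to frame j.
    """
    d = len(frames[0])
    scale = 1.0 / math.sqrt(d)
    outputs, weights = [], []
    for query in frames:
        # Compare this frame with every frame, near or far, in one step.
        scores = [scale * sum(q * k for q, k in zip(query, key)) for key in frames]
        w = softmax(scores)
        weights.append(w)
        # The output is the attention-weighted average of all frames.
        outputs.append([sum(wj * frame[i] for wj, frame in zip(w, frames)) for i in range(d)])
    return outputs, weights


# Toy 3-frame sequence: the first and last frames share a "hand shape".
frames = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
outputs, weights = self_attention(frames)
print([round(w, 3) for w in weights[0]])  # [0.401, 0.198, 0.401]
```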

The RGB-D Shift

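For all their progress, 2D vision systems still struggled with ambiguity, most notably around depth: a single ordinary camera cannot directly measure how far away a hand or body is. The depth perception problem was elegantly solved for the mass market in 2010 with the release of the Microsoft Kinect.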

The original Kinect was an affordable RGB-D (Red, Green, Blue + Depth) sensor. It operated by projecting a pattern of thousands of infrared dots into the environment and capturing the distortion of that pattern with an infrared camera. This allowed it to generate a real-time "depth map."
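The depth map comes from triangulation. Because the projector and infrared camera sit a fixed distance apart, a dot landing on a nearer surface appears shifted further in the camera image. The sketch below uses the standard triangulation relation Z = f·b/d, where f is the focal length in pixels, b is the baseline in meters, and d is the disparity in pixels. The Kinect's own processing measured dot shifts against a stored reference pattern, so treat this as a simplified model. The focal length and baseline values are illustrative assumptions.

```python
def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Depth (m) of a projected dot from its disparity (px): Z = f * b / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def depth_map(disparities, focal_px, baseline_m):
    """Convert a grid of disparities to depths; None marks pixels with no matched dot."""
    return [
        [None if d is None else depth_from_disparity(d, focal_px, baseline_m) for d in row]
        for row in disparities
    ]


# Illustrative parameters (assumed, not official Kinect calibration values).
FOCAL_PX = 580.0
BASELINE_M = 0.075

print(depth_from_disparity(29.0, FOCAL_PX, BASELINE_M))  # about 1.5 m
print(depth_from_disparity(58.0, FOCAL_PX, BASELINE_M))  # about 0.75 m: double the disparity, half the depth
```

Because depth is inversely proportional to disparity, a one-pixel disparity error produces a larger depth error at long range than up close.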

The Microsoft Kinect sensor provided real-time depth data, enabling robust skeletal tracking that revolutionized gesture recognition in gaming and beyond.

This was a paradigm shift for gesture recognition. Instead of inferring 3D structure from flat images, developers could start with a 3D point cloud. This made tasks like separating a person from the background far simpler. Most importantly, it enabled robust skeletal tracking of the 3D positions of key body joints, making complex, full-body gesture recognition a reality for consumers.
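With depth available, each pixel can be back-projected into a 3D point using a pinhole camera model. A person standing in front of the background can then be isolated with a simple depth band. The sketch below assumes known intrinsics (focal lengths `fx`, `fy` and principal point `cx`, `cy`, in pixels) and a hand-picked near/far range. It only illustrates the idea. It is not the Kinect's skeletal-tracking algorithm, which used a learned per-pixel body-part classifier.

```python
def depth_to_points(depth, fx, fy, cx, cy):
    """Back-project a depth map (meters; None = no reading) to (x, y, z) points."""
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z is None or z <= 0:
                continue
            # Pinhole model: pixel offset from the principal point, scaled by depth.
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


def segment_by_depth(depth, near, far):
    """Foreground mask: True where the depth falls inside [near, far]."""
    return [[z is not None and near <= z <= far for z in row] for row in depth]


# Toy 2x3 depth map: a person about 1.5 m away in front of a wall at 3 m.
depth = [
    [3.0, 1.5, 3.0],
    [3.0, 1.4, None],
]
print(segment_by_depth(depth, near=0.5, far=2.0))
# [[False, True, False], [False, True, False]]
points = depth_to_points(depth, fx=1.0, fy=1.0, cx=1.0, cy=0.0)  # hypothetical intrinsics
```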

Final Thoughts

The history of gesture recognition shows a clear trajectory toward greater naturalness and the dissolution of barriers between user and machine. We have evolved from wearing our interfaces to being understood by complex computational models that learn. Foundational vision-based work was a critical bridge that proved the feasibility of a markerless world and defined the core problems for the field. Today, the pursuit of more advanced gesture recognition continues. The ultimate goal remains unchanged: to make our interaction with technology as effortless and invisible as a simple wave of the hand.

FAQs

A static gesture is a single, stationary hand pose, such as a "thumbs-up." A dynamic gesture is defined by its movement over time, such as waving goodbye. Both are sub-fields of gesture recognition.

Marker-based recognition requires the user to wear a device with sensors or visible markers. Markerless gesture recognition uses only a camera to track the user's bare hands, which is the standard for modern consumer technology.

The Kinect was revolutionary because it was the first affordable consumer device to provide real-time 3D depth data. This solved major problems in computer vision and dramatically accelerated the development of robust and reliable gesture recognition systems.

Many state-of-the-art systems use a two-stage deep learning process. A Convolutional Neural Network (CNN) analyzes spatial features in video frames, and then a Recurrent Neural Network (RNN) or a Transformer model analyzes the temporal sequence of those features to classify the complete gesture.
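The two-stage design can be sketched end to end in plain Python. Stage 1 applies a small 2D convolution with global max pooling to each frame, producing a spatial feature vector. Stage 2 runs a simple Elman RNN over those vectors and applies a softmax to the final hidden state. All weights here are tiny hand-set placeholders rather than trained parameters. A real system would use a deep CNN and an LSTM, GRU, or Transformer.

```python
import math


def conv_max_pool(frame, kernel):
    """Stage 1 (spatial): valid 2D convolution, then global max pooling."""
    kh, kw = len(kernel), len(kernel[0])
    best = -math.inf
    for y in range(len(frame) - kh + 1):
        for x in range(len(frame[0]) - kw + 1):
            response = sum(
                kernel[i][j] * frame[y + i][x + j]
                for i in range(kh)
                for j in range(kw)
            )
            best = max(best, response)
    return best


def frame_features(frame, kernels):
    """One pooled response per kernel gives the frame's feature vector."""
    return [conv_max_pool(frame, k) for k in kernels]


def rnn_classify(feature_seq, w_in, w_h, w_out):
    """Stage 2 (temporal): h_t = tanh(W_in x_t + W_h h_{t-1}); softmax(W_out h_T)."""
    h = [0.0] * len(w_h)
    for x in feature_seq:
        h = [
            math.tanh(
                sum(a * b for a, b in zip(w_in[i], x))
                + sum(a * b for a, b in zip(w_h[i], h))
            )
            for i in range(len(h))
        ]
    logits = [sum(a * b for a, b in zip(row, h)) for row in w_out]
    top = max(logits)
    exps = [math.exp(z - top) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical toy setup: one 2x2 "bright blob" kernel, one hidden unit, two classes.
kernels = [[[1.0, 1.0], [1.0, 1.0]]]
video = [[[0.0, 0.0, 0.0], [0.0, 1.0, 1.0], [0.0, 1.0, 1.0]]] * 3
features = [frame_features(f, kernels) for f in video]
probs = rnn_classify(features, w_in=[[0.25]], w_h=[[0.5]], w_out=[[1.0], [-1.0]])
print(features[0])  # [4.0]
print(probs)  # class probabilities summing to 1
```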

Future advancements in gesture recognition include higher-fidelity hand and finger tracking for fine-motor tasks in AR/VR, combining gesture with eye-tracking for more context-aware commands, and developing systems that can learn a user's personal gestures on the fly.
