Introduction

Deepfakes began as internet novelties. Five years ago, most people encountered them in viral clips: celebrities singing songs they never recorded or politicians mouthing words they never spoke. They were curiosities, often dismissed as harmless digital play.

That landscape has collapsed. The same technology used for humour now fuels financial fraud, corporate espionage, and geopolitical misinformation. In 2023 alone, Europol reported that 20% of identity fraud cases in Europe involved some form of synthetic media. In Hong Kong, a multinational firm lost $25 million in a single deepfake-enabled scam when attackers impersonated a CFO on a video call.

The challenge is sobering: if AI can fake us better than we can prove ourselves, what becomes of trust? The answer lies in multimodal biometric systems: architectures that combine several streams of biological and behavioural data, raising the bar for attackers and rewriting the rules of digital security.

Why Unimodal Biometrics Are Vulnerable to Deepfakes

For years, a photograph of our face or a recording of our voice was considered a reliable key to our digital kingdoms. That assumption no longer holds. The weakness of systems that rely on a single biological trait is their simplicity.

The internet has become a vast repository of the raw materials needed to forge these keys. A high-resolution photo from social media or a voice clip from a video call is all an AI needs to begin creating a convincing digital puppet. Traditional systems that only ask, "Does this face match the one on file?" are asking a question that a sophisticated deepfake is now designed to answer with a convincing "yes." This creates a critical vulnerability, a single point of failure that fraudsters have learned to exploit with devastating efficiency. These systems are susceptible to noisy data, spoofing, and other issues that reduce accuracy.

What Are Multimodal Biometric Systems?

A multimodal biometric system is an advanced security technology that verifies a person's identity by analyzing two or more independent biological or behavioral traits simultaneously. Instead of relying on just a face (visual) or just a voice (auditory), these systems integrate facial features, voice patterns, and even underlying physiological signs to create a comprehensive and highly secure identity profile.

The core principle is that while a fraudster might be able to fake one biometric trait, it is exponentially more difficult to convincingly fake multiple, unrelated traits in perfect synchrony. This layered approach overcomes the limitations of unimodal systems, leading to greater accuracy and security.
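To make the layering argument concrete, here is a back-of-the-envelope sketch. It assumes the spoof attempts on each trait succeed independently, and the per-trait success rates are illustrative numbers, not measurements from any real system.

```python
from math import prod

def joint_spoof_probability(per_trait_success):
    """Probability that an attacker fools every modality at once,
    assuming the spoof attempts succeed independently of each other."""
    return prod(per_trait_success)

# Hypothetical per-trait spoof success rates (illustrative only).
face_only = joint_spoof_probability([0.10])
face_voice_pulse = joint_spoof_probability([0.10, 0.10, 0.10])

print(f"one trait:    {face_only:.3f}")
print(f"three traits: {face_voice_pulse:.3f}")
```

Under that independence assumption, each added trait multiplies the attacker's odds by another small number, which is where the "exponentially more difficult" intuition comes from.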

Large-scale biometric projects such as citizen identification require capturing and storing the biometric data of millions of people. This can be achieved using biometric de-duplication software such as MULTI MABIS. The sections below look at how multimodal systems defend against deepfakes.

How Multimodal Systems Defend Against Deepfakes

The true power of multimodal biometric systems lies in their forensic approach. This deepfake detection technology is engineered not just to match a pattern, but to actively hunt for the subtle yet damning evidence of digital forgery across different modalities.

1. Visual Clues

To unmask a deepfake, the system first analyzes the visual stream for involuntary tells that AI generators often fail to replicate perfectly. This involves extracting key visual features using a battery of forensic tools.

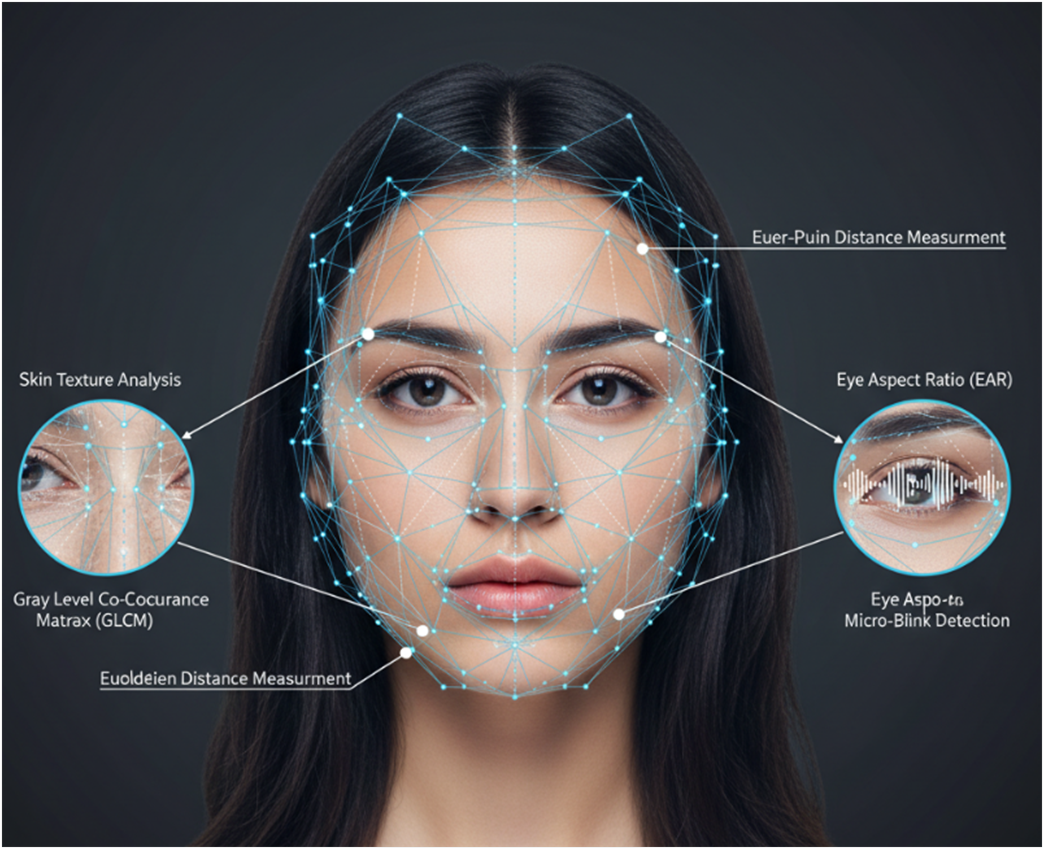

Facial Landmark and Geometry Analysis:

The process often begins by identifying a Region of Interest (ROI) using algorithms like Haar Cascade, a machine learning-based object detection method. From there, solutions like MediaPipe's FaceMesh map out 468 distinct 3D facial landmarks in real time. The system then measures the Euclidean distance between these points to check for geometric consistency. Is the distance between the pupils stable? Do the cheekbones maintain a natural height relative to the chin? AI models often introduce subtle, unnatural warping in these areas.
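The geometric consistency check can be sketched in a few lines. This is a minimal illustration, not the method of any particular product: it assumes landmarks have already been extracted into per-frame dictionaries, and the landmark names and the `make_frame` helper are hypothetical stand-ins for real detector output.

```python
from math import dist
from statistics import mean, pstdev

def distance_ratio(frame, a, b, ref_a, ref_b):
    """Distance between landmarks a and b, divided by a reference distance
    so the value does not change when the face moves closer to the camera."""
    return dist(frame[a], frame[b]) / dist(frame[ref_a], frame[ref_b])

def ratio_variation(frames, a, b, ref_a, ref_b):
    """Coefficient of variation of the normalised distance across frames."""
    ratios = [distance_ratio(f, a, b, ref_a, ref_b) for f in frames]
    return pstdev(ratios) / mean(ratios)

def make_frame(scale, pupil_gap):
    """Hypothetical landmark set (x, y, z); real values would come from a detector."""
    return {
        "left_pupil": (-pupil_gap / 2 * scale, 0.0, 0.0),
        "right_pupil": (pupil_gap / 2 * scale, 0.0, 0.0),
        "forehead": (0.0, 4.0 * scale, 0.0),
        "chin": (0.0, -8.0 * scale, 0.0),
    }

# A rigid face that only moves toward and away from the camera.
steady = [make_frame(s, 6.0) for s in (1.0, 1.1, 1.2, 0.9)]
# A face whose pupil spacing drifts from frame to frame.
warped = [make_frame(1.0, g) for g in (6.0, 6.6, 5.5, 6.3)]

args = ("left_pupil", "right_pupil", "forehead", "chin")
steady_cv = ratio_variation(steady, *args)
warped_cv = ratio_variation(warped, *args)
print(steady_cv, warped_cv)
```

Dividing by a reference distance keeps the measure insensitive to zoom, so only genuine changes in facial proportions raise the variation. Any cut-off for "too much variation" would need to be tuned on real data.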

Involuntary Biological Indicators:

A living person blinks, and their head moves in minute, unconscious ways. The system tracks the eye aspect ratio to detect irregular blinking patterns, a common artifact in older deepfakes. It also analyzes head pose by tracking movement along the x, y, and z axes, looking for the jittery or unnaturally smooth motions that betray a digital puppet.
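As a rough sketch of the blink check, the eye aspect ratio (EAR) is commonly computed from six eye-contour points as the sum of the two vertical lid distances divided by twice the horizontal eye width. The point ordering, the 0.2 threshold, and the two-frame minimum below are assumptions chosen for illustration.

```python
from math import dist

def eye_aspect_ratio(eye):
    """eye: six (x, y) points p1..p6, ordered as outer corner, two upper-lid
    points, inner corner, then two lower-lid points (p5 under p3, p6 under p2)."""
    p1, p2, p3, p4, p5, p6 = eye
    return (dist(p2, p6) + dist(p3, p5)) / (2.0 * dist(p1, p4))

def count_blinks(ear_series, closed_below=0.2, min_frames=2):
    """Count runs of at least min_frames consecutive frames with EAR under the threshold."""
    blinks, run = 0, 0
    for ear in ear_series:
        if ear < closed_below:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # series ended while the eye was still closed
        blinks += 1
    return blinks

# Hypothetical open eye: 3 units wide, 1 unit tall.
open_eye = [(0, 0), (1, 0.5), (2, 0.5), (3, 0), (2, -0.5), (1, -0.5)]
open_ear = eye_aspect_ratio(open_eye)

# Hypothetical per-frame EAR values containing two dips.
series = [0.31, 0.30, 0.12, 0.10, 0.29, 0.30, 0.11, 0.09, 0.08, 0.32]
blinks = count_blinks(series)
print(open_ear, blinks)
```

A blink rate far outside the normal human range over a clip, in either direction, is the kind of signal this check is meant to surface.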

Skin Tone and Texture Analysis:

A crucial and innovative technique involves analyzing skin. Using the oRGB color space, which separates brightness from color channels, the system can detect microscopic color fluctuations. It also uses a Gray Level Co-Occurrence Matrix (GLCM) to analyze skin texture, measuring contrast and correlation. Real skin has a complex, imperfect texture, whereas deepfakes often exhibit an unnaturally smooth or inconsistent texture that these algorithms can flag.
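To show what the GLCM texture measures actually compute, here is a small pure-Python sketch. It assumes the skin patch has already been converted to grayscale and quantised to a handful of levels; the two 4x4 patches are made-up data, and a production system would more likely use an image library than hand-rolled loops.

```python
from math import sqrt

def glcm(patch, levels, dx=1, dy=0):
    """Normalised, symmetric co-occurrence matrix for pixel pairs offset by (dx, dy)."""
    counts = [[0.0] * levels for _ in range(levels)]
    rows, cols = len(patch), len(patch[0])
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < rows and 0 <= c2 < cols:
                i, j = patch[r][c], patch[r2][c2]
                counts[i][j] += 1
                counts[j][i] += 1  # count both directions so the matrix is symmetric
    total = sum(map(sum, counts))
    return [[v / total for v in row] for row in counts]

def contrast(p):
    """Average squared gray-level difference between neighbouring pixels."""
    n = len(p)
    return sum(p[i][j] * (i - j) ** 2 for i in range(n) for j in range(n))

def correlation(p):
    """Linear dependency of gray levels between neighbouring pixels."""
    n = len(p)
    cells = [(i, j) for i in range(n) for j in range(n)]
    mu_i = sum(i * p[i][j] for i, j in cells)
    mu_j = sum(j * p[i][j] for i, j in cells)
    var_i = sum((i - mu_i) ** 2 * p[i][j] for i, j in cells)
    var_j = sum((j - mu_j) ** 2 * p[i][j] for i, j in cells)
    if var_i == 0 or var_j == 0:
        return 1.0  # constant patch: treated as perfectly correlated by convention
    cov = sum((i - mu_i) * (j - mu_j) * p[i][j] for i, j in cells)
    return cov / sqrt(var_i * var_j)

# Made-up 4-level patches: one perfectly flat, one with visible texture.
smooth = [[2] * 4 for _ in range(4)]
textured = [[0, 3, 1, 2], [2, 0, 3, 1], [1, 2, 0, 3], [3, 1, 2, 0]]

smooth_contrast = contrast(glcm(smooth, 4))
textured_contrast = contrast(glcm(textured, 4))
textured_corr = correlation(glcm(textured, 4))
print(smooth_contrast, textured_contrast, textured_corr)
```

The flat patch yields zero contrast, which is the "unnaturally smooth" signature described above, while the textured patch scores much higher.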

Most multimodal systems include a built-in detection step that uses an algorithm such as Haar Cascade to locate the face and then works through a checklist of known facial landmarks.

2. Auditory Fingerprints

Just as a deepfake video has visual tells, synthetic audio has its own unique acoustic fingerprints. The system's auditory analysis is designed to spot these giveaways with forensic precision.

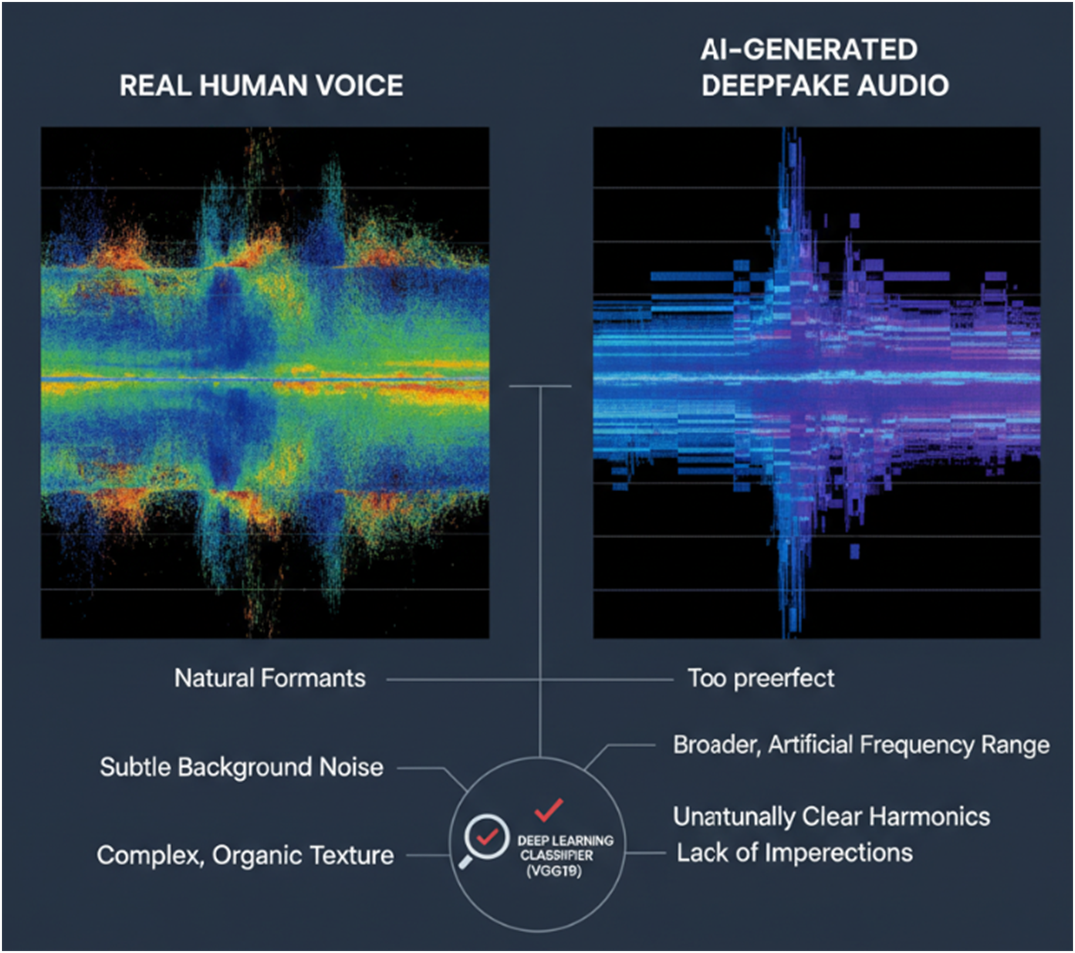

Mel-Spectrogram Analysis:

The primary tool for this is the mel-spectrogram, a detailed visual representation of an audio signal. It converts sound into a 2D image showing how frequencies change over time, using the mel scale to better represent how humans perceive pitch. Human speech, with its imperfections and background noise, creates a specific type of spectrogram. In contrast, deepfake audio often exhibits a broader frequency range, unnaturally clear harmonics, and a lack of the subtle imperfections of a real human voice.
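The mel scale itself is easy to illustrate. The sketch below uses one widely used conversion formula (others exist) and shows how frequency bands that are evenly spaced in mels become progressively wider in hertz. The function names and the 0 to 8000 Hz range are illustrative choices.

```python
from math import log10

def hz_to_mel(f_hz):
    """One common hertz-to-mel conversion; other variants are in use."""
    return 2595.0 * log10(1.0 + f_hz / 700.0)

def mel_to_hz(m):
    """Inverse of hz_to_mel."""
    return 700.0 * (10 ** (m / 2595.0) - 1.0)

def mel_band_centres(f_min, f_max, n_bands):
    """Centre frequencies (Hz) of n_bands bands spaced evenly on the mel scale."""
    lo, hi = hz_to_mel(f_min), hz_to_mel(f_max)
    step = (hi - lo) / (n_bands + 1)
    return [mel_to_hz(lo + step * (k + 1)) for k in range(n_bands)]

centres = mel_band_centres(0.0, 8000.0, 10)
gaps = [b - a for a, b in zip(centres, centres[1:])]
print([round(c) for c in centres])
```

The widening gaps mirror human hearing: fine resolution at low frequencies, where pitch differences are easy to hear, and coarser resolution higher up.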

A deep learning classifier then takes the mel-spectrogram, the 2D image showing how frequencies change over time, and looks for the missing imperfections of a real human voice.

Deep Learning Models in Action:

To classify these spectrograms, security architects employ powerful deep learning models. A pre-trained Convolutional Neural Network (CNN) like VGG19, originally trained on the ImageNet dataset for image classification, has proven exceptionally effective. By using transfer learning, the model leverages its vast knowledge to become highly adept at spotting the spatial patterns within spectrograms that scream "fake," achieving high accuracy in tests.

Biometric Liveness Detection with rPPG

Perhaps the most critical defense is biometric anti-spoofing, or liveness detection, which verifies that the system is interacting with a living person, not a recording or an avatar.

The most advanced form of this is remote photoplethysmography (rPPG). This technique analyzes the video feed of a user's face to detect the microscopic color changes caused by blood flowing through their capillaries. Because blood volume changes with each heartbeat, these subtle shifts in the light reflected from the skin allow the user's heart rate to be measured remotely.
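A heavily simplified version of the rPPG idea looks like this. It assumes a face region has already been tracked and reduced to one average green-channel value per frame, then searches the plausible heart-rate band for the strongest periodic component. The synthetic signal, the 42 to 240 bpm band, and the function name are assumptions for illustration; real pipelines add filtering, motion compensation, and more careful colour handling.

```python
from math import sin, cos, pi

def estimate_bpm(green_means, fps, bpm_range=(42, 240)):
    """Return the heart rate (bpm) whose frequency carries the most power
    in the mean-removed signal, searched in 1 bpm steps."""
    n = len(green_means)
    avg = sum(green_means) / n
    centred = [g - avg for g in green_means]
    best_bpm, best_power = None, -1.0
    for bpm in range(bpm_range[0], bpm_range[1] + 1):
        w = 2 * pi * (bpm / 60.0) / fps  # radians per frame
        re = sum(x * cos(w * t) for t, x in enumerate(centred))
        im = sum(x * sin(w * t) for t, x in enumerate(centred))
        power = re * re + im * im
        if power > best_power:
            best_bpm, best_power = bpm, power
    return best_bpm

# Synthetic 10-second clip at 30 fps (not real measurements).
fps = 30
signal = [
    120.0
    + 0.5 * sin(2 * pi * 1.2 * t / fps)  # pulse at 1.2 Hz, i.e. 72 bpm
    + 0.2 * sin(2 * pi * 0.1 * t / fps)  # slow lighting drift, outside the band
    for t in range(300)
]
bpm = estimate_bpm(signal, fps)
print(bpm)
```

A printed photo or a replayed avatar with no underlying blood flow would be expected to show no clear peak in this band, which is what makes the signal useful for liveness checks.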

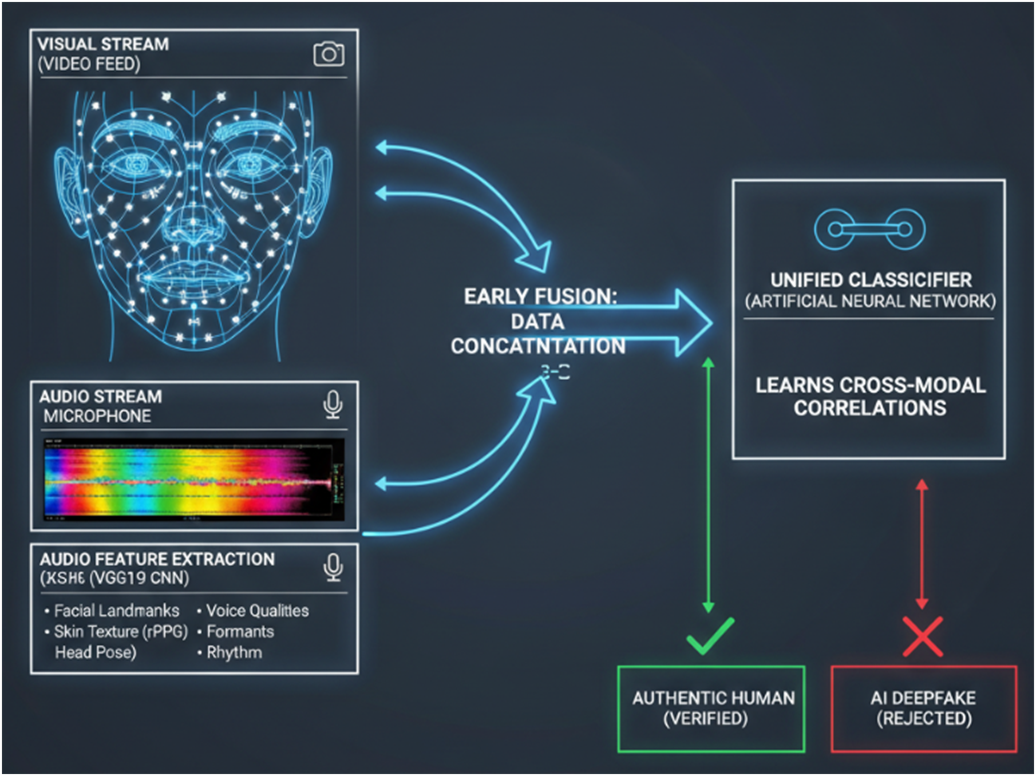

Combining Visual and Audio Clues

The true power of this technology lies in its ability to fuse evidence from both the visual and auditory streams to form a single, robust judgment. The method of fusion dramatically impacts performance.

The most effective strategy is Early Fusion, also known as feature-level fusion. In this approach, the feature vectors extracted from the video and the audio are concatenated at the very beginning of the process. This combined data stream is then fed into a single, unified classifier.

This method is superior because it allows the model to learn the deep, inter-modal relationships from the outset. It can ask questions like, "Does the movement of the lips perfectly correlate with the specific sounds being produced?" By analyzing these cross-modal correlations, the Early Fusion model can detect the minute desynchronizations and inconsistencies that are the Achilles' heel of even the most advanced deepfakes.

The feature vectors are extracted from the video and the audio, concatenated at the very beginning, and then fed into a single, unified classifier.
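The early-fusion idea can be sketched with a toy example. The features (a single mouth-opening value from video and a single speech-energy value from audio) and the hand-set weights are hypothetical; a real system would use long feature vectors and learn the weights from training data.

```python
from math import exp

def early_fusion(video_features, audio_features):
    """Feature-level fusion: join the two vectors before any classification."""
    return list(video_features) + list(audio_features)

def relu(x):
    return max(0.0, x)

def fake_probability(fused, hidden_weights, output_weights, bias):
    """Tiny one-hidden-layer classifier; every hidden unit reads both modalities."""
    hidden = [relu(sum(w * x for w, x in zip(row, fused))) for row in hidden_weights]
    logit = sum(w * h for w, h in zip(output_weights, hidden)) + bias
    return 1.0 / (1.0 + exp(-logit))

# Hand-set (not trained) weights: each hidden unit fires when the mouth
# opening and the speech energy disagree in one direction.
HIDDEN = [[1.0, -1.0], [-1.0, 1.0]]
OUTPUT = [8.0, 8.0]
BIAS = -2.0

# Features scaled to 0..1: [mouth_opening] from video, [speech_energy] from audio.
in_sync = fake_probability(early_fusion([0.8], [0.8]), HIDDEN, OUTPUT, BIAS)
out_of_sync = fake_probability(early_fusion([0.1], [0.9]), HIDDEN, OUTPUT, BIAS)
print(round(in_sync, 3), round(out_of_sync, 3))
```

Because the classifier sees both modalities in one input, its hidden units can respond to lip and sound mismatches directly, something two separate per-modality classifiers could not do.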

Validating Deepfake Detectors for Real-World Effectiveness

A lab demonstration is one thing; real-world effectiveness is another. To ensure these systems are not just academic exercises, they are subjected to rigorous cross-dataset testing. This means a model is trained on one set of data and then tested on completely different, unseen datasets.

For example, a model trained on Dataset A is then evaluated on a separate dataset that it has never seen.

This process is critical because it tests the system's ability to detect new types of fakes it has never encountered before. The results are compelling. Even when trained on separate, single-mode datasets, a multimodal system using Early Fusion consistently outperforms its unimodal counterparts, achieving high AUC scores (a measure of a classifier's ability to distinguish between classes) in the wild. This suggests the system is not just memorizing fakes; it is learning the fundamental principles of what makes media authentic.
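For readers unfamiliar with AUC, here is a minimal sketch of how it can be computed: it is the probability that a randomly chosen fake receives a higher score than a randomly chosen genuine sample, with ties counted as half. The labels and scores are invented to stand in for a detector's output on an unseen dataset.

```python
def roc_auc(labels, scores):
    """Area under the ROC curve via pairwise comparison.
    labels: 1 for fake, 0 for genuine. scores: higher means 'more likely fake'."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one example of each class")
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

# Invented scores from a detector evaluated on data it was not trained on.
labels = [1, 1, 1, 0, 0, 0]
scores = [0.9, 0.8, 0.4, 0.5, 0.3, 0.1]
auc = roc_auc(labels, scores)
print(round(auc, 3))
```

An AUC of 0.5 means the detector is guessing, while 1.0 means every fake outranks every genuine sample. The pairwise loop is fine for small examples; large evaluations typically use a sorting-based method.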

Understanding Presentation Attacks (PAs) and the ISO Standard

The technical term for using a fake artifact to fool a biometric scanner is a Presentation Attack (PA). A unimodal system, which checks only one trait, is especially vulnerable to PAs. For example:

A high-resolution photo or video can fool a basic facial recognition system.

A silicone fingerprint mold can bypass many consumer-grade scanners.

An AI-generated voice clone can defeat a voiceprint system.

The International Organization for Standardization (ISO) defines a framework for evaluating these threats in the ISO/IEC 30107 standard. This standard classifies the complexity of different PAs and outlines testing methodologies for Presentation Attack Detection (PAD) technologies, ensuring they are vetted against known threats. Many deepfakes now qualify as sophisticated PAs that basic unimodal systems are simply not equipped to detect.
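Part 3 of ISO/IEC 30107 reports PAD performance using two error rates: APCER, the share of attack presentations wrongly accepted as genuine, and BPCER, the share of genuine (bona fide) presentations wrongly flagged as attacks. The sketch below computes both from a list of outcomes. It pools all attack types together for simplicity, whereas the standard reports APCER per attack instrument type, and the sample data is invented.

```python
def pad_error_rates(is_attack, flagged_as_attack):
    """Return (APCER, BPCER) for paired ground truth and detector decisions.
    Simplification: all attack types are pooled into one APCER figure."""
    attack_flags = [f for a, f in zip(is_attack, flagged_as_attack) if a]
    bona_fide_flags = [f for a, f in zip(is_attack, flagged_as_attack) if not a]
    if not attack_flags or not bona_fide_flags:
        raise ValueError("need both attack and bona fide presentations")
    apcer = sum(1 for f in attack_flags if not f) / len(attack_flags)
    bpcer = sum(1 for f in bona_fide_flags if f) / len(bona_fide_flags)
    return apcer, bpcer

# Invented outcomes: 4 attacks (1 missed) and 5 genuine users (1 wrongly flagged).
is_attack = [True, True, True, True, False, False, False, False, False]
flagged = [True, True, True, False, False, False, True, False, False]
apcer, bpcer = pad_error_rates(is_attack, flagged)
print(apcer, bpcer)
```

The two rates pull against each other: tightening the detector lowers APCER but tends to raise BPCER, so both need to be reported together.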

Multimodal vs. Multi-Factor Authentication (MFA)

These terms are often confused but describe different security concepts.

Multi-Factor Authentication (MFA) combines different categories of authentication: something you know (password), something you have (phone/token), and something you are (a biometric).

Multimodal Biometrics is a highly advanced implementation of the "something you are" category, using multiple biological identifiers within that single factor.

A system requiring a fingerprint and a PIN is MFA. A system requiring a fingerprint and an iris scan is a multimodal biometric system.
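The distinction can be expressed as a small check. The credential names and the mapping below are illustrative; "knowledge", "possession", and "inherence" are the usual labels for something you know, have, and are.

```python
# Illustrative mapping from credential type to authentication factor.
FACTOR_OF = {
    "password": "knowledge",
    "pin": "knowledge",
    "phone": "possession",
    "token": "possession",
    "fingerprint": "inherence",
    "iris": "inherence",
    "face": "inherence",
    "voice": "inherence",
}

def describe(credentials):
    """Report whether a set of credentials is multi-factor and/or multimodal biometric."""
    factors = {FACTOR_OF[c] for c in credentials}
    biometrics = {c for c in credentials if FACTOR_OF[c] == "inherence"}
    return {
        "multi_factor": len(factors) >= 2,
        "multimodal_biometric": len(biometrics) >= 2,
    }

fingerprint_and_pin = describe(["fingerprint", "pin"])
fingerprint_and_iris = describe(["fingerprint", "iris"])
print(fingerprint_and_pin, fingerprint_and_iris)
```

The two properties are independent, so a system can have both: a face scan, a voice check, and a PIN would be multimodal and multi-factor at once.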

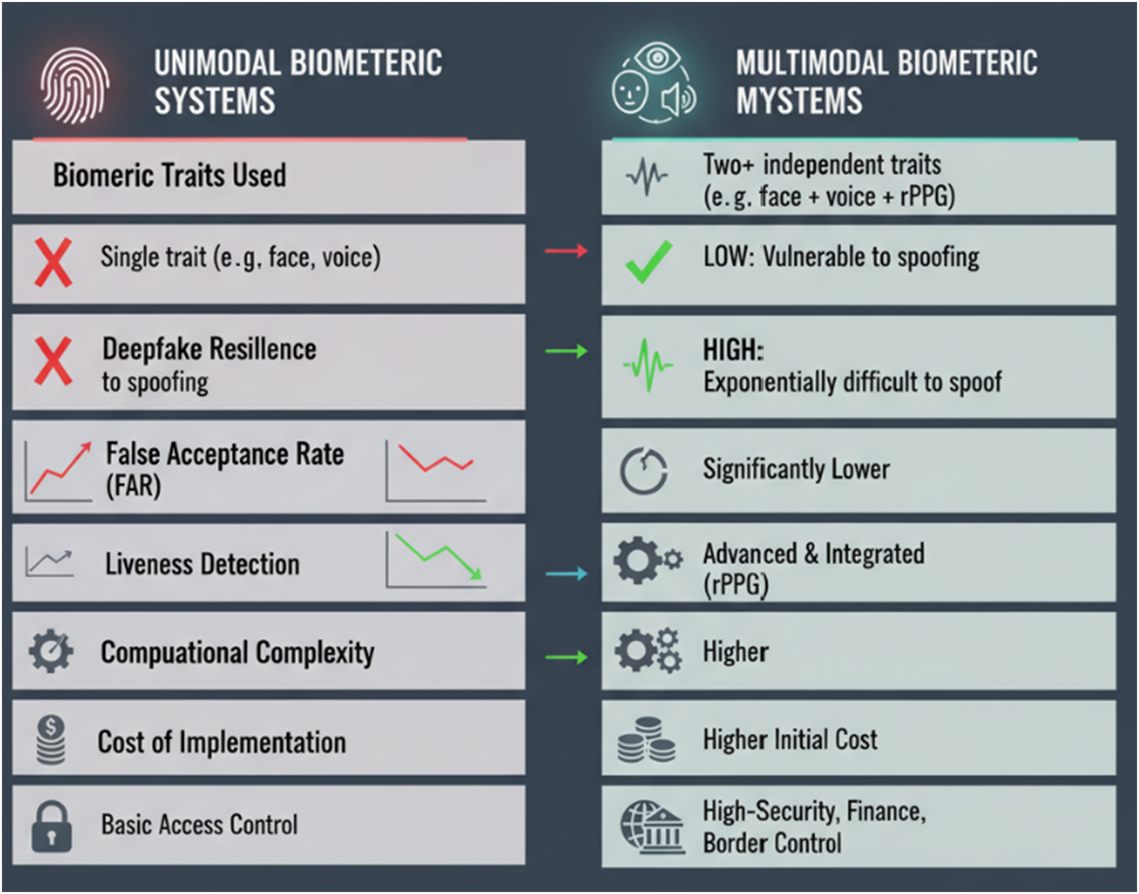

Unimodal vs. Multimodal Biometric Systems: A Data-Driven Comparison

The advantages of a multimodal approach become clear when compared directly with single-modality systems.

| Feature | Unimodal Biometric System | Multimodal Biometric System |
|---|---|---|
| Security Level | Lower; vulnerable to spoofing of a single trait. | Higher; an attacker must spoof multiple, unrelated traits simultaneously. |
| Accuracy | Prone to errors from poor-quality data (e.g., a scarred fingerprint, low light for face ID). | Significantly more accurate; if one trait fails, others can still verify the user, reducing false rejections. |
| Reliability | Lower; a single point of failure can lock out a legitimate user. | Higher; offers more flexibility and lessens the chance of failure-to-enroll issues. |
| Vulnerability to PAs | High. A photo, video, or voice recording can often defeat the system. | Very Low. Defeating multiple, synchronized liveness checks is exponentially more difficult. |
| User Convenience | Can be high if the single trait is easily captured, but frustrating if it fails. | High, with adaptive fusion allowing the system to use the best available data. |
| Cost & Complexity | Generally lower cost and simpler to implement. | Higher initial cost and complexity due to multiple sensors and fusion algorithms. |

Unimodal Biometric Systems vs Multimodal Biometric Systems

Real-World Applications

The adoption of multimodal biometric security is growing across sectors where trust and identity are critical.

Secure Access

In a real-world corporate setting, companies are enhancing security and automating attendance by integrating cloud-based HR software with Mantra's facial recognition technology directly into their flap-barrier entry systems. As an employee approaches the gate to their floor, a device scans their face for instant verification. Upon successful identification, the system simultaneously grants access by opening the barrier and automatically clocks them in, feeding the time and attendance data directly to the central server via an API.

Border Control

Airports and government agencies deploy these systems to verify traveler identities with high accuracy, strengthening national security. Airports in Madrid and Barcelona, Spain, have implemented quick access border control systems for European citizens. These systems use a combination of facial and fingerprint recognition to verify a traveler's identity against their electronic passport, speeding up the immigration process. Similarly, Kuwait is rolling out a multimodal biometric system that includes face, fingerprint, and iris scanning to enhance its border security and immigration procedures.

Healthcare

Hospitals use multimodal biometrics for accurate patient identification, preventing medical identity theft and ensuring patient records are secure. The Martin Health System in Florida integrated an iris and facial recognition system to address challenges with duplicate medical records and patient fraud. This touchless solution allows for quick and accurate patient identification, ensuring that the correct medical records are accessed and updated, which is crucial for patient safety.

Financial Services

Banks and fintech companies use multimodal systems to secure mobile banking apps and authorize high-value transactions, preventing fraud. For instance, a bank in Indonesia utilizes fingerprint authentication to allow supervisors to quickly and securely authorize transactions initiated by tellers.

Final Thoughts

The battle against deepfakes is an ongoing arms race. The future of identity verification and digital security will rely on continuous innovation. Future research is focused on optimizing these complex models for real-time detection on everyday devices and expanding datasets to include more diverse forgeries.

Technically, the next step involves incorporating recurrent models, such as Recurrent Neural Networks (RNNs) and their Long Short-Term Memory (LSTM) variant, to better analyze temporal patterns. Researchers are also exploring attention mechanisms to dynamically weigh the importance of visual versus audio clues. The ultimate goal is to create a universally accessible, highly accurate, and transparent system that can serve as a trusted guardian of our shared digital reality.
